2024 Australian Survey Assessing Risks from AI

We conducted a representative survey of Australian adults to understand public perceptions of AI risks and support for AI governance actions in Australia.

Key Insights

The Survey Assessing Risks from AI (SARA), a representative online survey of 1,141 Australians conducted in January-February 2024, investigated public perceptions of AI risks and support for AI governance actions.

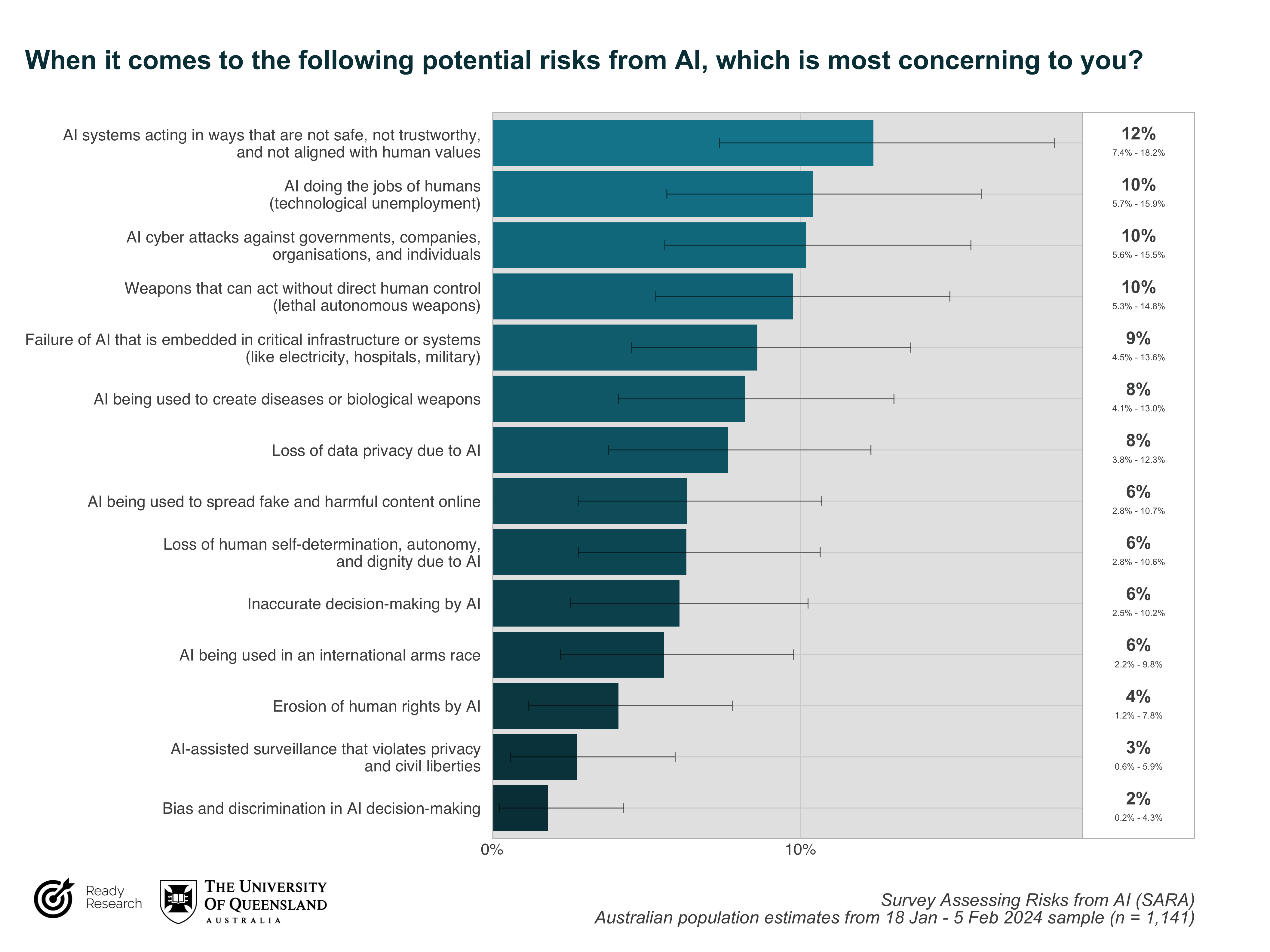

Australians are most concerned about AI risks where AI acts unsafely (e.g., acting in conflict with human values, failure of critical infrastructure), is misused (e.g., cyber attacks, biological weapons), or displaces human jobs; they are least concerned about AI-assisted surveillance and about bias and discrimination in AI decision-making.

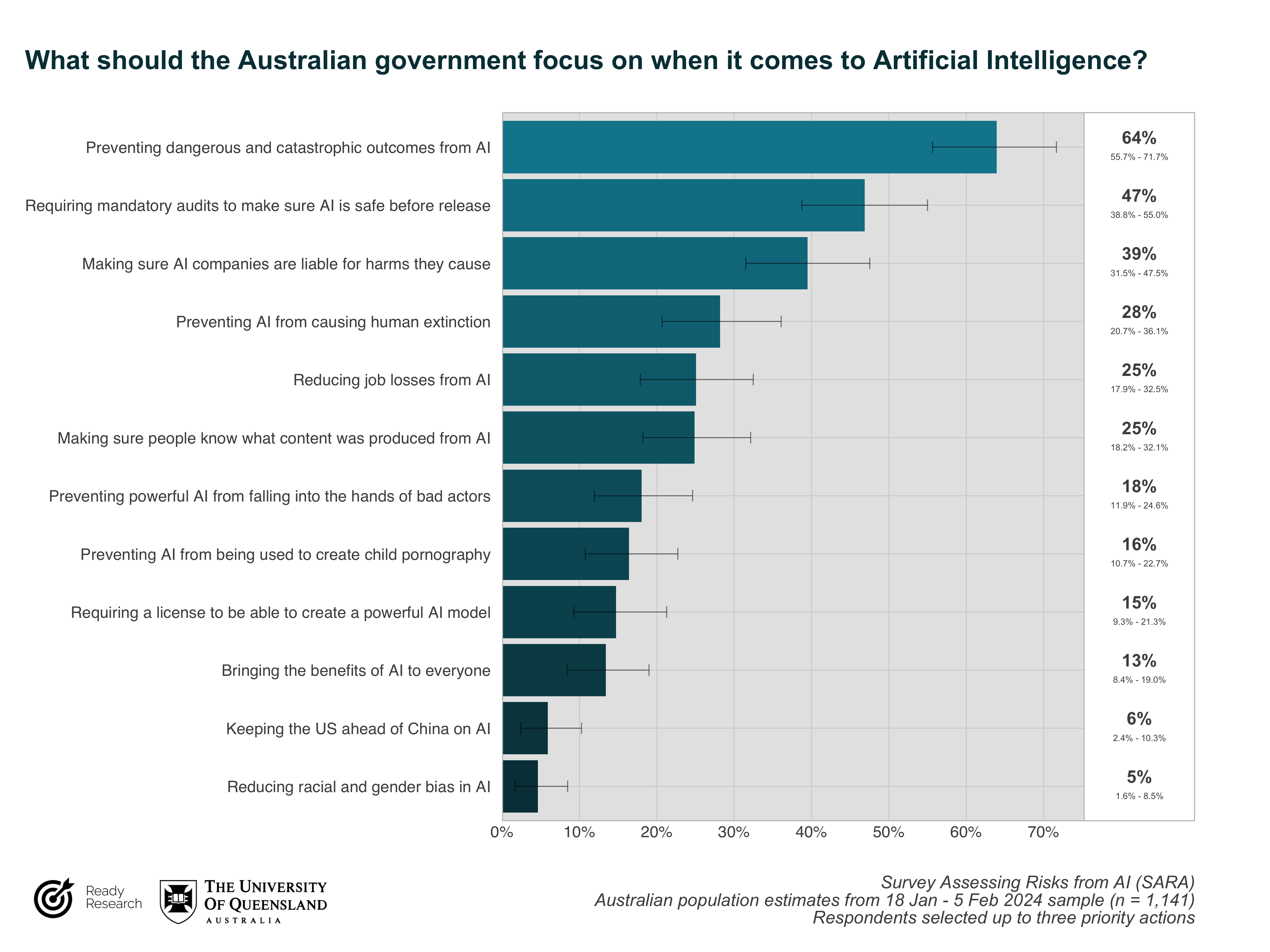

Australians judge “preventing dangerous and catastrophic outcomes from AI” the #1 priority for the Australian Government in AI; 9 in 10 Australians support creating a new regulatory body for AI.

The majority of Australians (8 in 10) support the statement that “mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war”.

Read the briefing

Read the technical report

2024 Survey Assessing Risks from AI - Technical Report (PDF)

2024 Survey Assessing Risks from AI - Technical Report (webpage)

Suggested citation: Saeri, A.K., Noetel, M., & Graham, J. (2024). Survey Assessing Risks from Artificial Intelligence: Technical Report. Ready Research, The University of Queensland.

Australians are concerned about diverse risks from AI

Australians support regulatory and non-regulatory action to address AI risks

About the Survey

Understanding public beliefs and expectations about artificial intelligence (AI) risks, and about possible responses to those risks, is important for ensuring that the ethical, legal, and social implications of AI are addressed through effective governance.

We conducted the Survey Assessing Risks from AI (SARA) to generate ‘evidence for action’, to help public and private actors make the decisions needed for safer AI development and use.

This survey investigates public concern about 14 different risks from AI, ranging from AI being used to spread fake and harmful content online to AI being used to create biological and chemical weapons. It also investigates public support for AI development and regulation, and priority governance actions to address risks from AI (with a focus on government action).

We recruited 1,141 Australians through online representative quota sampling stratified by age, sex, and Australian state / territory. We also conducted multilevel regression with poststratification to construct more accurate population estimates based on 2021 Australian Census data.
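As a rough illustration of the poststratification step only, the sketch below reweights cell-level estimates by population counts. It is not the project's actual analysis code: the `Cell` class, the `poststratify` function, the cell definitions, and every number are hypothetical and are not survey or Census results. In a full multilevel regression with poststratification, the `predicted_support` values would come from a fitted multilevel model rather than being typed in by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """One demographic cell (hypothetical structure, for illustration only)."""
    age_group: str
    sex: str
    state: str
    census_count: int         # people in this cell according to a census table (made-up values)
    predicted_support: float  # model-predicted proportion for this cell (made-up values)


def poststratify(cells: list[Cell]) -> float:
    """Return the population estimate: cell predictions weighted by census counts."""
    total = sum(c.census_count for c in cells)
    if total == 0:
        raise ValueError("census counts sum to zero")
    return sum(c.census_count * c.predicted_support for c in cells) / total


# Illustrative cells only; these are NOT results from the survey or the Census.
cells = [
    Cell("18-34", "female", "QLD", 1000, 0.80),
    Cell("18-34", "male", "QLD", 1000, 0.70),
    Cell("35+", "female", "QLD", 2000, 0.90),
    Cell("35+", "male", "QLD", 2000, 0.85),
]

estimate = poststratify(cells)
unweighted = sum(c.predicted_support for c in cells) / len(cells)
print(f"poststratified: {estimate:.3f}, unweighted mean of cells: {unweighted:.3f}")
```

Because larger cells count for more, the poststratified figure can differ from a simple average of cell estimates, which is how this kind of adjustment corrects for a sample whose composition differs from the population.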

This project is a collaboration between Ready Research and The University of Queensland. The project team is Dr Alexander Saeri, Dr Michael Noetel, and Jessica Graham.