Survey Assessing Risks from AI 2024

Understanding Australian public views on AI risks and governance

We conducted a representative survey of Australian adults to understand public perceptions of AI risks and governance actions.

Key Insights


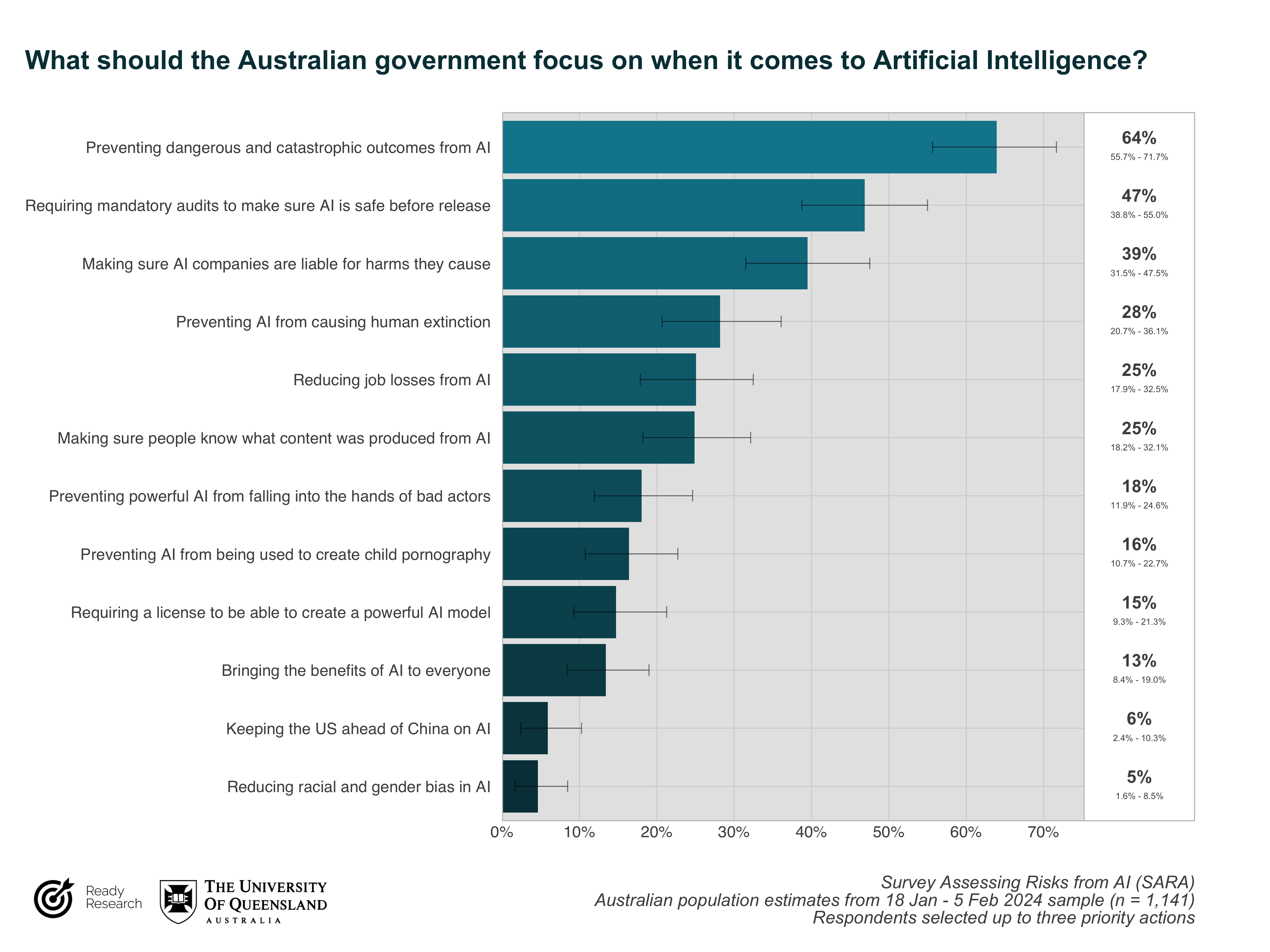

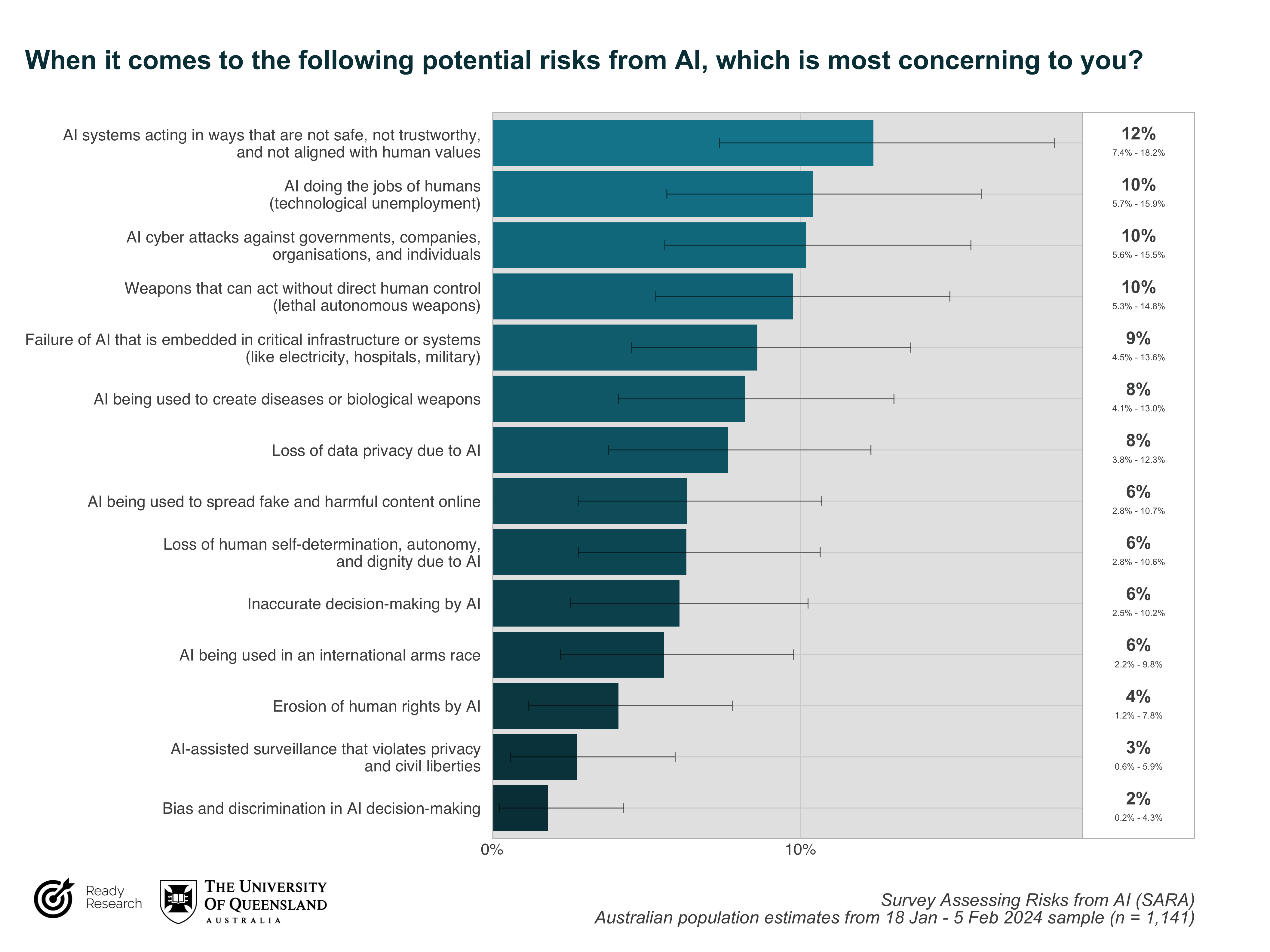

2024 Survey (1,141 Australians): Australians are most concerned about AI risks where AI acts unsafely, is misused, or displaces jobs. They rank "preventing dangerous and catastrophic outcomes from AI" as the #1 priority for the Australian Government on AI, and 9 in 10 Australians support creating a new regulatory body for AI.

A large majority of Australians (8 in 10) support the statement that "mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war".

What This Means for Australia

Strong public mandate for action: With 9 in 10 Australians supporting a new regulatory body for AI, and “preventing dangerous and catastrophic outcomes from AI” rated as the top government priority, there is overwhelming public support for robust AI governance.

Risk awareness is broad: Australians recognize diverse AI risks—from current harms like misinformation and job displacement to potential catastrophic outcomes. This comprehensive risk awareness supports a governance framework that addresses both near-term and long-term challenges.

Global perspective: The strong support (8 in 10 Australians) for treating AI extinction risk as a global priority alongside pandemics and nuclear war suggests the public understands that AI governance requires international cooperation and serious attention.

How to Read This Research

- 2-Page Summary (PDF) - Key findings

- 2-Page Summary (Google Doc) - Editable version for sharing and commenting

- 2024 Technical Report (interactive report) - Full findings with data visualizations, read online

- 2024 Technical Report (SSRN PDF) - Published academic version for citation

Suggested citation: Saeri, A.K., Noetel, M., & Graham, J. (2024). Survey Assessing Risks from Artificial Intelligence: Technical Report. SSRN. http://dx.doi.org/10.2139/ssrn.4750953

Australians are concerned about diverse risks from AI

Australians support regulatory and non-regulatory action to address AI risks